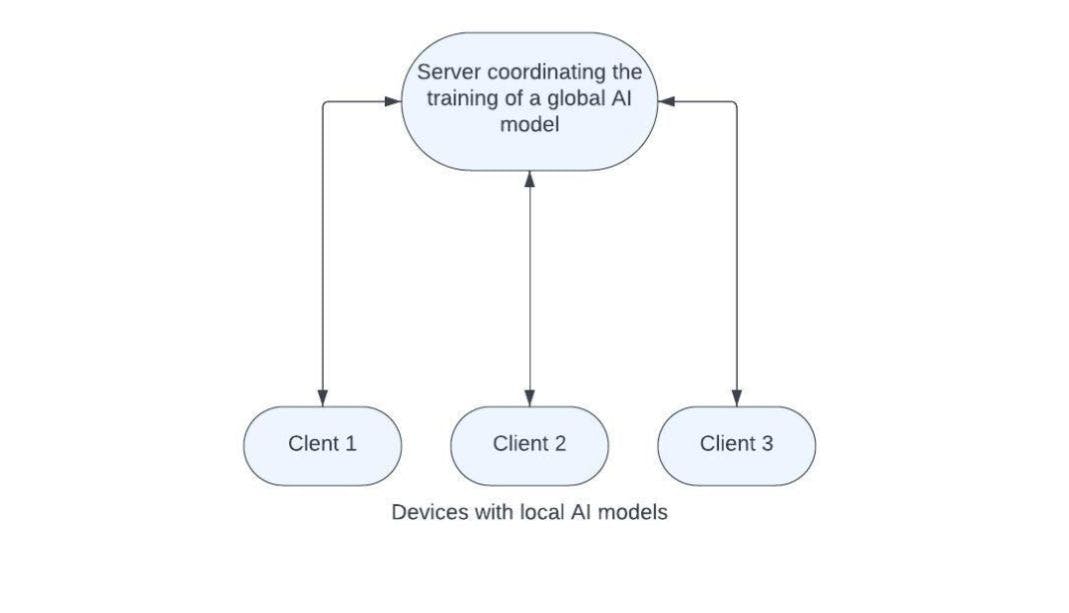

Federated Learning Reimagined: Advancing Data Privacy in Distributed AI Systems

February 21st, 2024

For almost 20 years, I have been building and managing software products focused on privacy protection.
