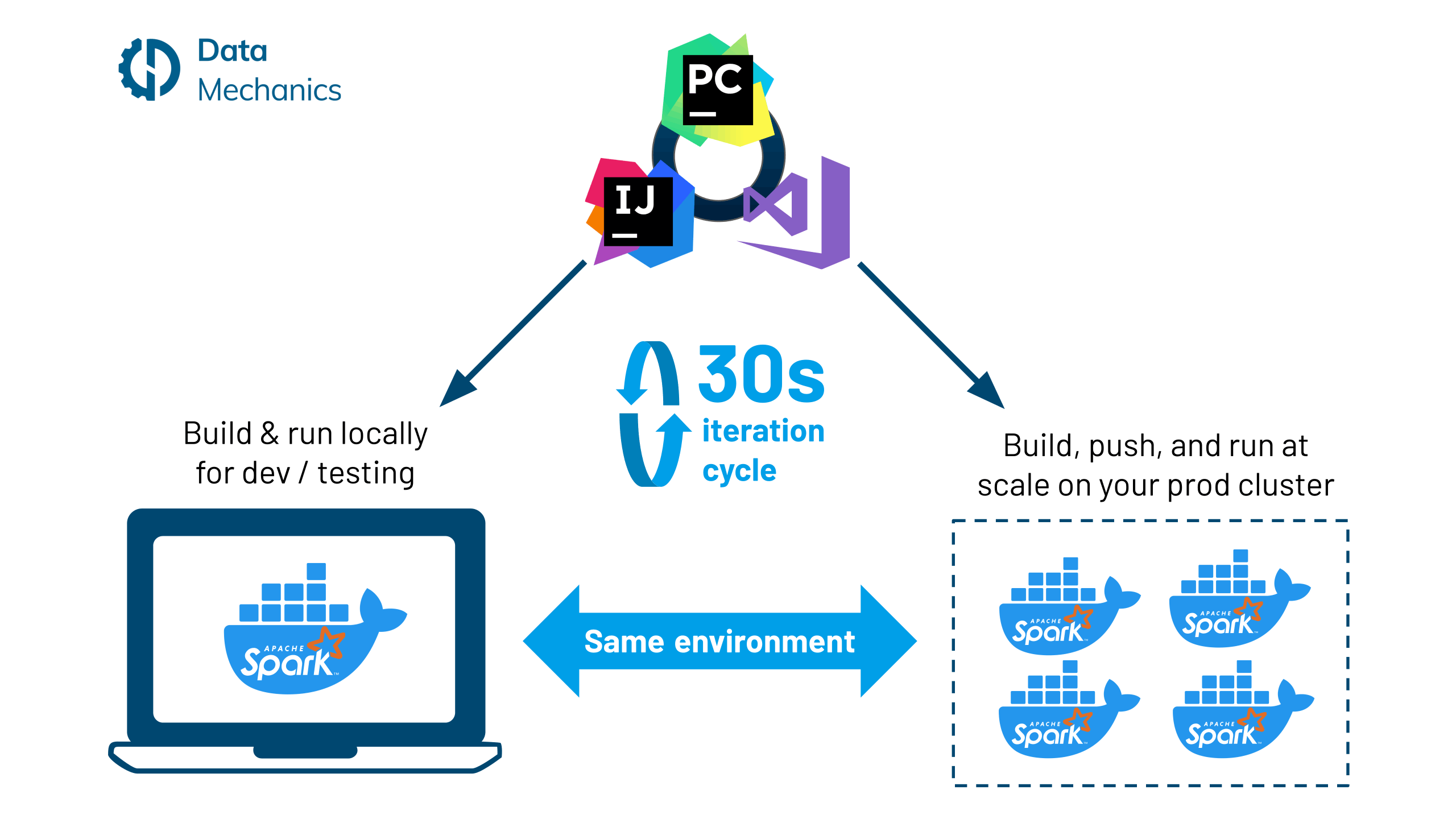

Docker Dev Workflow for Apache Spark

by

December 19th, 2020

Co-Founder @ Data Mechanics (www.datamechanics.co). Previously software engineer @ Databricks.
