
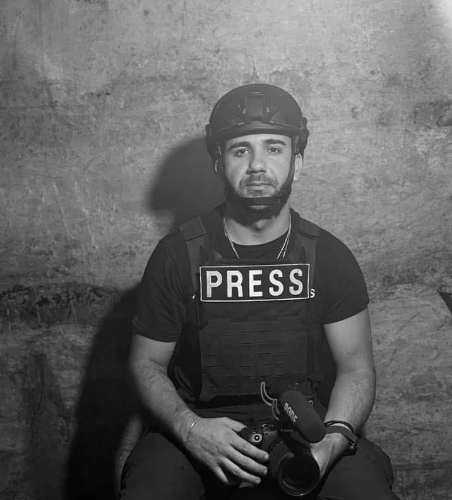

Social Media and the Battlefield: Human Error in Modern Warfare


May 14th, 2024


David writes about culture, cyberspace, digital currencies, economics, foreign affairs, psychology, and technology.
