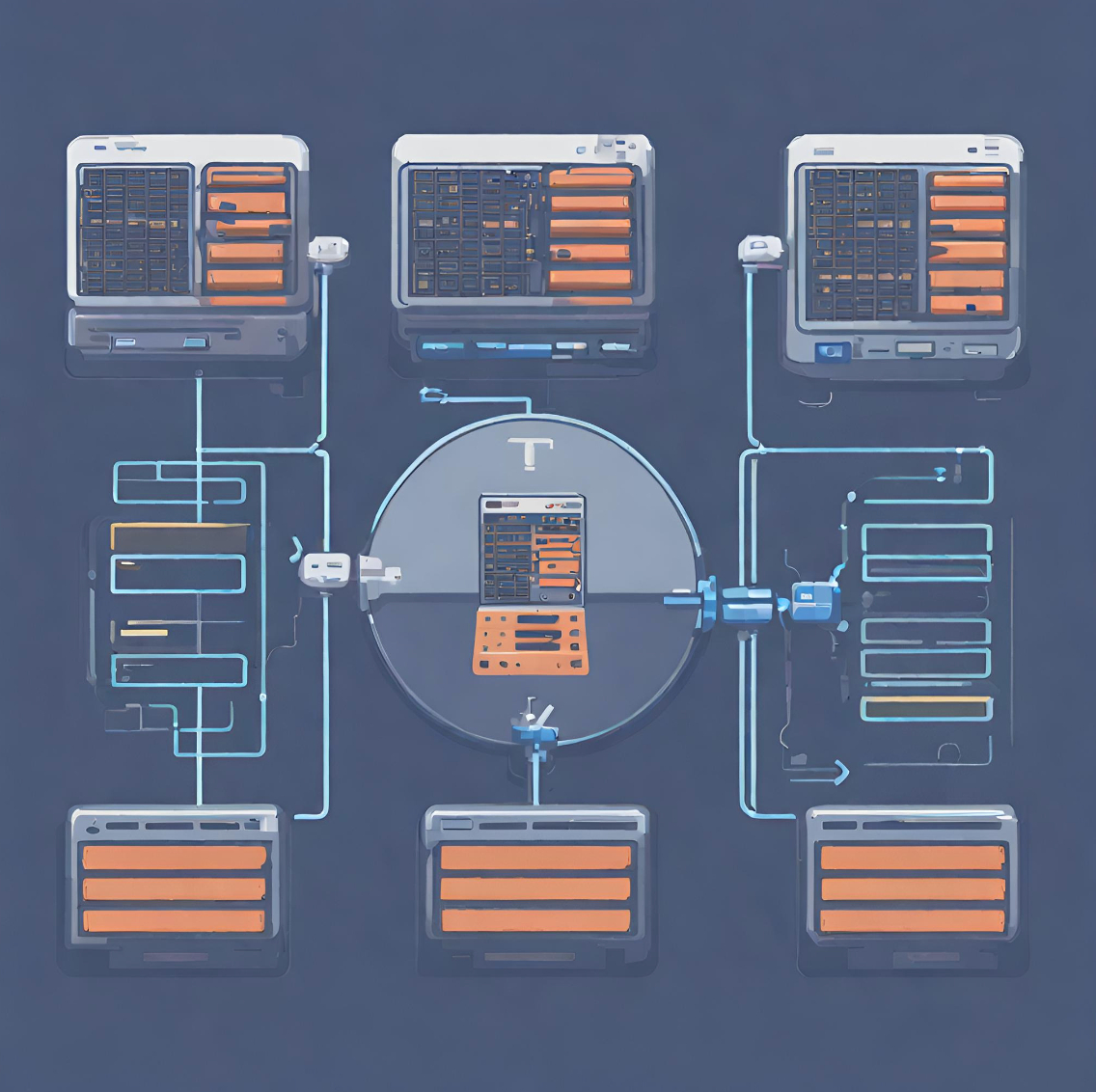

Load Balancing For High Performance Computing Using Quantum Annealing: Grid Based Application

by

July 3rd, 2024

Workloads align, resources harmonize, Load Balancing's careful orchestration ensures no single point of overload.
