
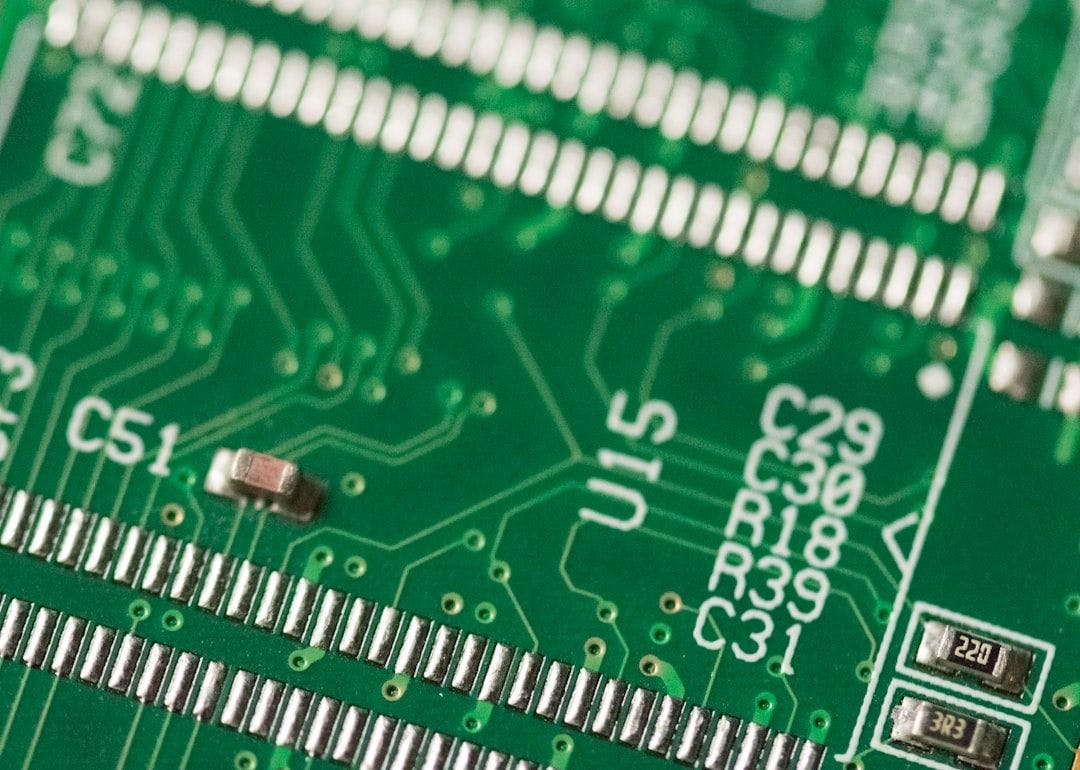

Large Language Models on Memory-Constrained Devices Using Flash Memory: Results for Falcon 7B Model


July 31st, 2024


Optimizing capacity with Knapsack, efficiently packing valuable essentials for a lighter and more sustainable journey fo
