Interface and Data Biopolitics in the Age of Hyperconnectivity: Conclusions and References

by

August 29th, 2024

Audio Presented by

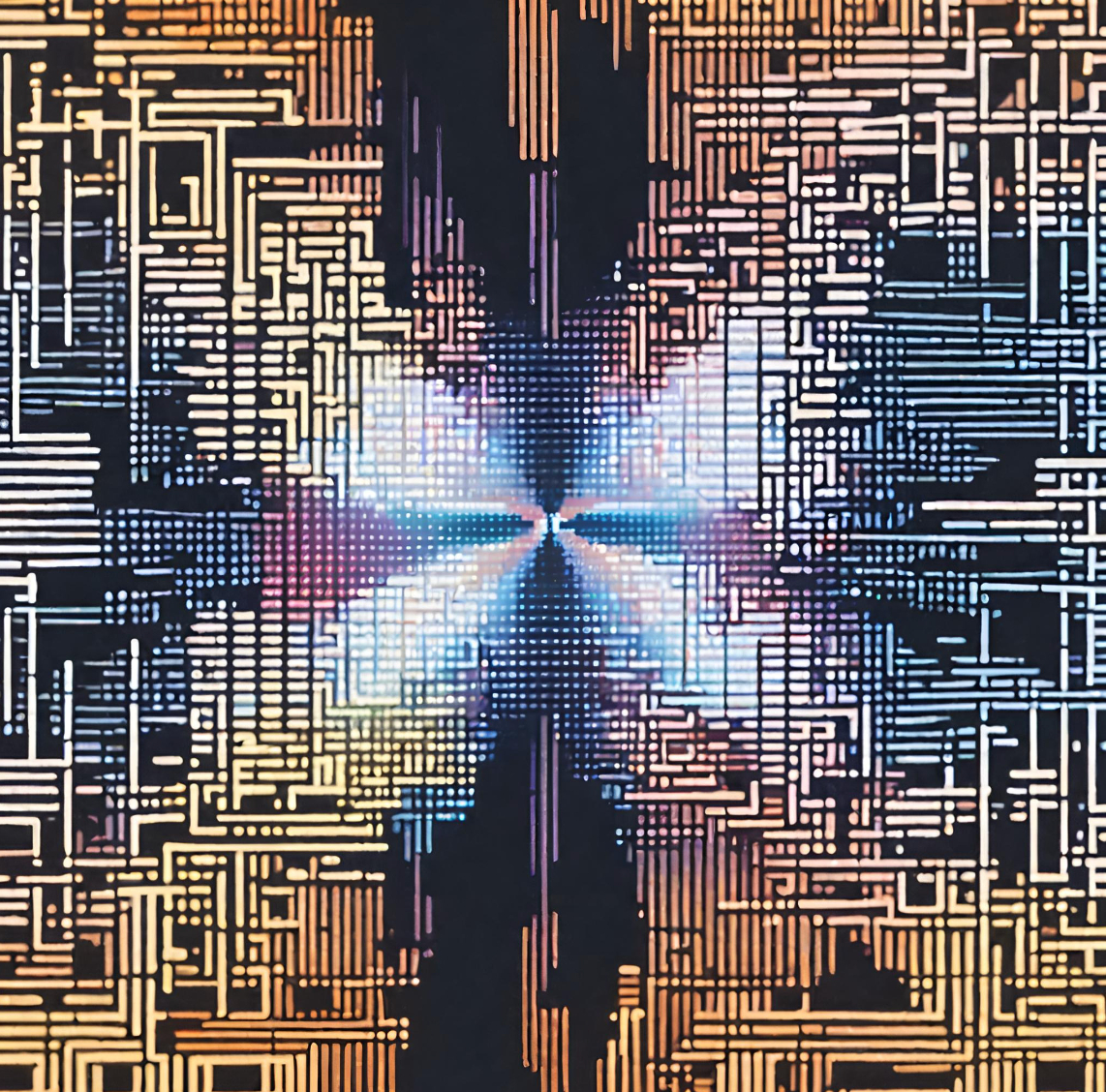

HyperConnectivity weaves a vibrant digital tapestry, blurring boundaries and multiplying possibilities.
