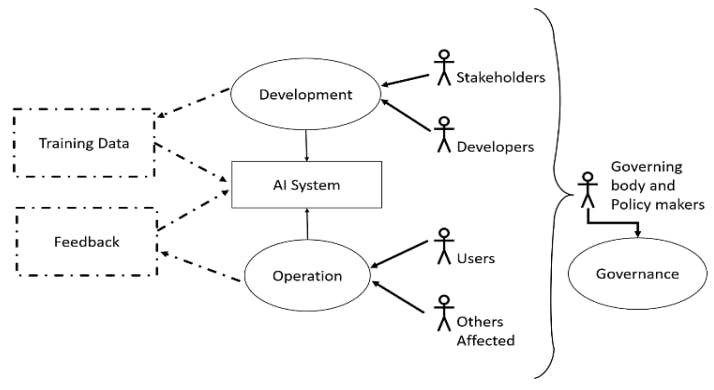

Operationalizing Diversity and Inclusion Requirements for AI Systems: Results

by

July 3rd, 2024

No technological innovation comes without sacrifice. The pendulum will swing back to the people! Wanna' be 501c3.
