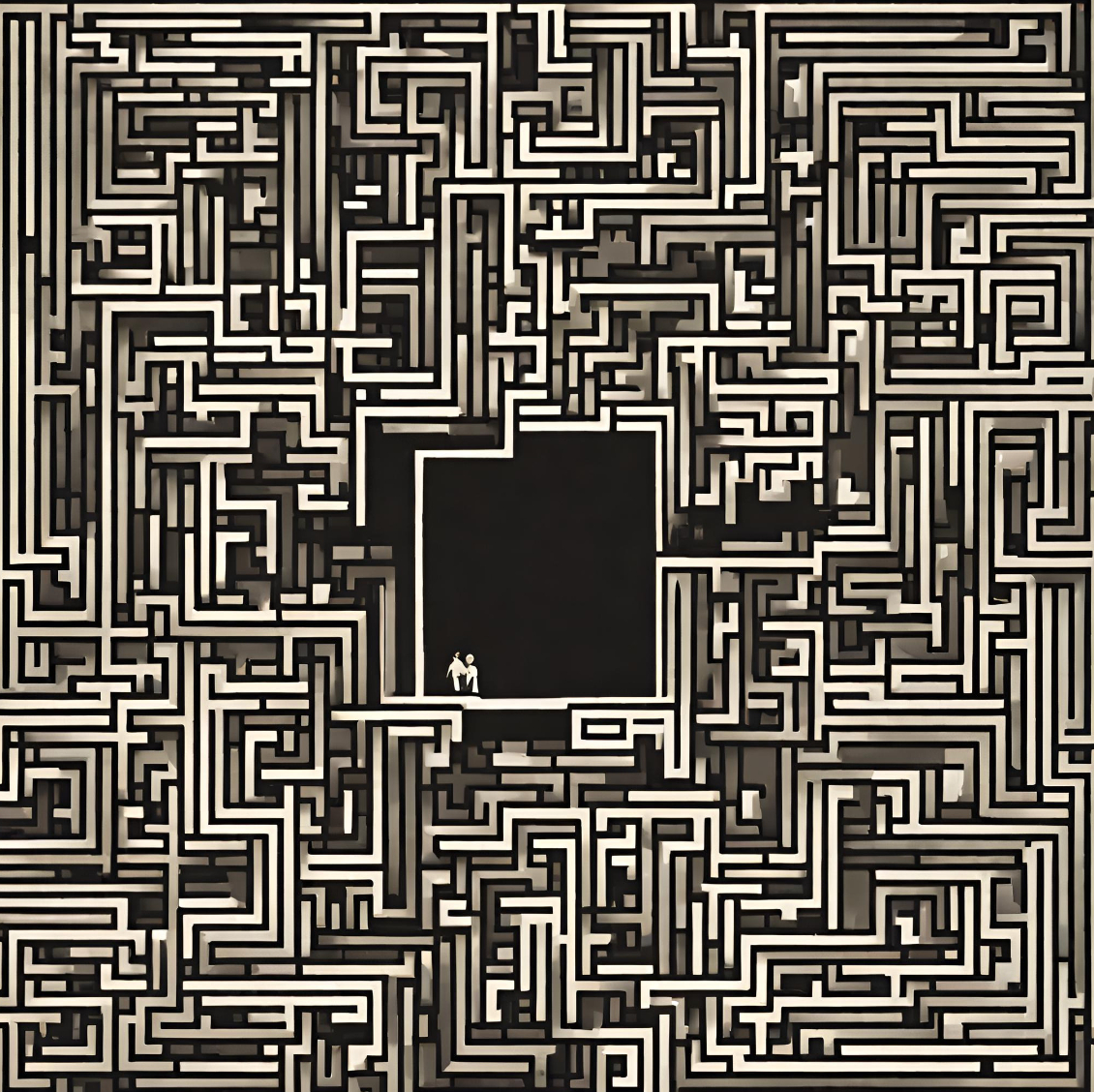

Efficient Detection of Defects in Magnetic Labyrinthine Patterns: Methodology

September 18th, 2024

A journey through the mind's own pace, unraveling the threads of thought, in the labyrinth's spiraling heart.
