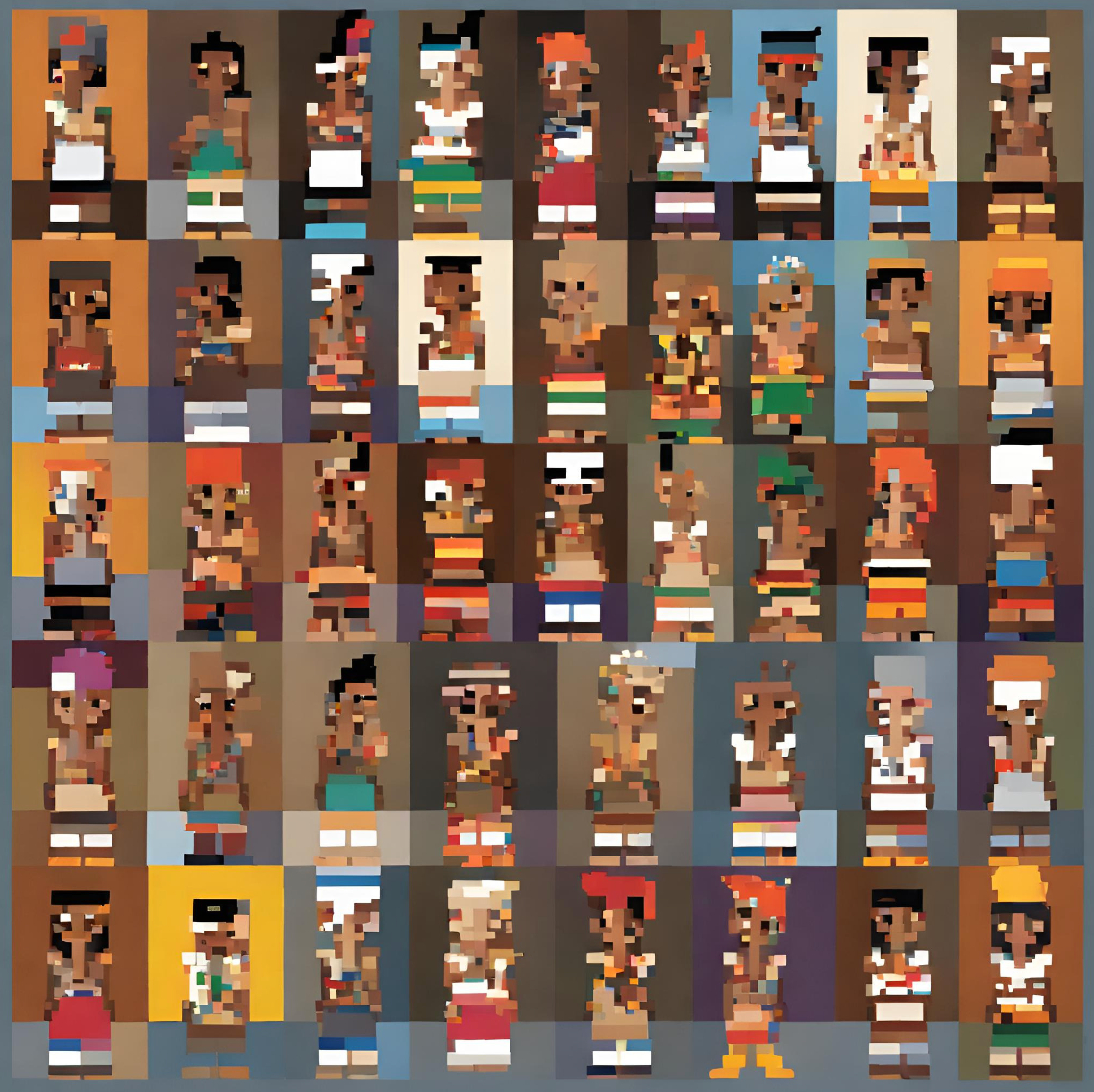

AI Design Must Prioritize Adaptation to Human Culture, Trust, and Ethics

by

December 19th, 2024

Ethnology Technology decodes the cultural ripple effects of technology, from societal shifts to personal connections.
