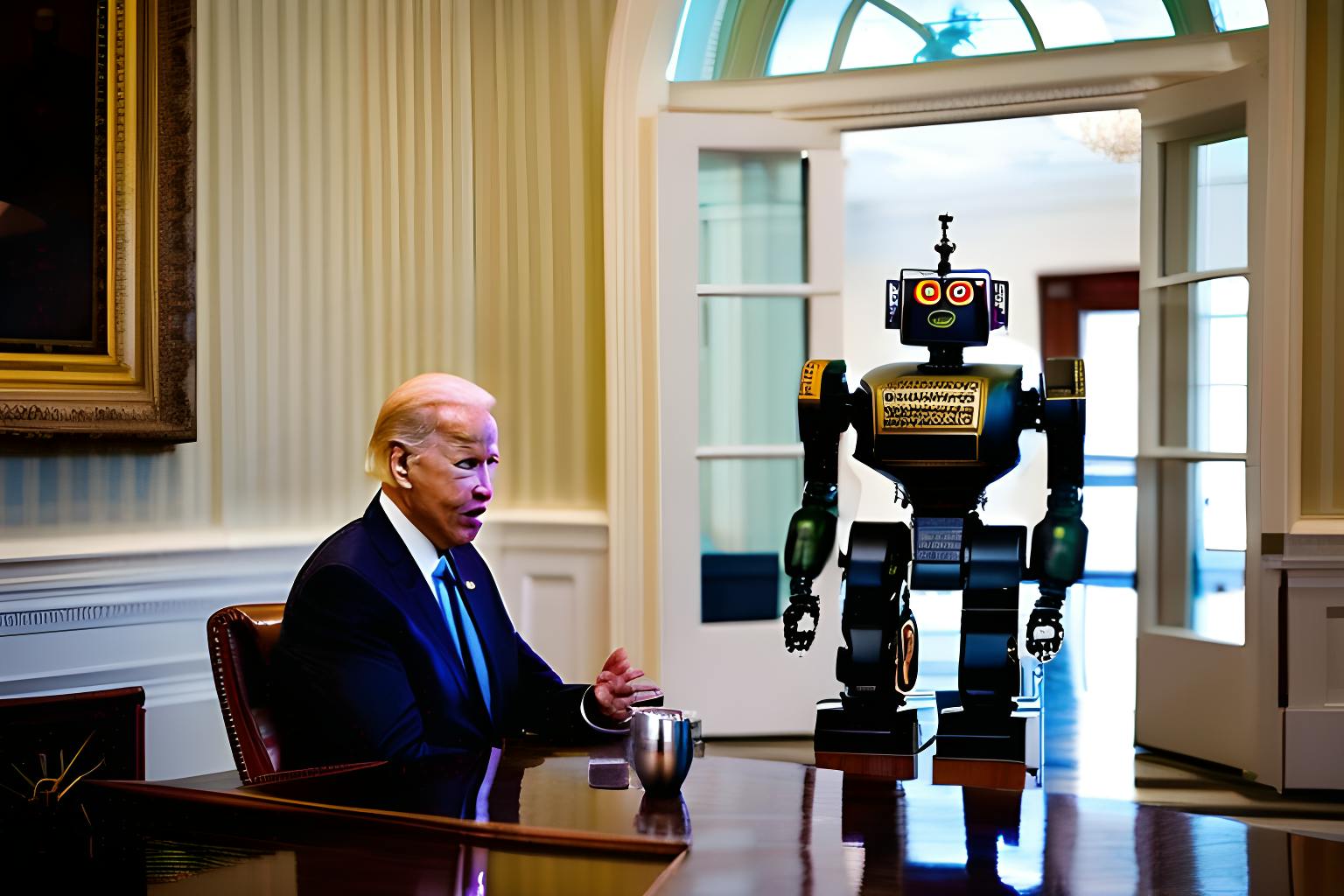

Biden Charges Federal Government Agencies to Adopt AI

by

November 8th, 2023

About Author

The White House is the official residence and workplace of the president of the United States.